Intel’s Fourth Graphics Attempt | IEEE Computer Society

Intel surprised the industry in 2018 when word got out that the company would launch a discrete GPU product line. Intel had tried to enter the discrete graphics chip market before and, with the failure of the infamous i740 in 1999, vowed never again. But it was a socket Intel didn’t have, so they gave it another go and launched the Larrabee project in 2007.

After three years of bombast, Intel shocked the world by canceling Larrabee. Instead of launching the chip in the consumer market, Intel would make it available as a software development platform for both internal and external developers, who could use it to develop software for high-performance computers.

“Larrabee silicon and software development are behind where we hoped to be at this point in the project,” said Intel spokesperson Nick Knupffer. “As a result, our first Larrabee product will not be launched as a standalone discrete graphics product.” (December 4, 2009.)

How it began

Intel launched the Larrabee project in 2005 under the code name SMAC. Paul Otellini, Intel’s CEO, hinted at the project in 2007 during his Intel Developer Forum (IDF) keynote. Otellini said it would be a 2010 release and would compete against AMD and Nvidia in the realm of high-end graphics.

Intel announced Larrabee in 2008, presenting it at Siggraph in early August, at IDF in mid-August, and at the Hot Chips conference in late August. The company said Larrabee would have dozens of small, in-order x86 cores and run as many as 64 threads. The chip would be a coprocessor suitable for graphics processing or scientific computing. Intel said at the time that programmers could decide at any given moment how they would use those cores.

By 2007, the industry was pretty much aware of Larrabee, although details were scarce and came dribbling out from various Intel events around the world. By August 2007, it was known that the product would be x86 and capable of performing graphics functions like a GPU, though not a “GPU” in the sense that we know them, and that it was expected to show up sometime in 2010, probably in the second half of the year.

Speculation about the device, in particular how many cores it would have, entertained the industry and maybe employed a few pundits, and several stories appeared that added to the confusion. Our favorite depiction of the device was the blind men feeling the elephant – everyone (outside Intel) claimed to know exactly what it was – and no one knew what it was beyond the tiny bits they were told.

What we did know was that it would be a many-core product (“many” meant something over 16 that year) and would have a ring-communications system, hardware texture processors (a concession to the advantages of an ASIC), and a really big coherent cache. But most importantly, it would be a bunch of familiar x86 processors, and those processors would have world-class floating-point capabilities with 512-bit vector units and quad threading.

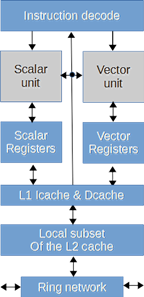

The diagram is schematic: the number of CPU cores and the number and type of coprocessors and I/O blocks were implementation-dependent, as were the locations of the CPU and non-CPU blocks on the chip. Each core could access a subset of a coherent L2 cache to provide high-bandwidth access and simplify data sharing and synchronization.

Larrabee’s programmability offered support for traditional graphics APIs such as DirectX and OpenGL through renderers that ran as software layers and used a tile-based deferred rendering approach. Tile-based deferred rendering can be very bandwidth-efficient, but it presented some compatibility problems at the time because the PCs of the day were not using tiling.
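To make the tiling idea concrete, here is a minimal sketch in Python of the binning step a tile-based renderer performs before shading: each triangle is assigned to every screen tile its bounding box touches, so that each tile can later be rendered on its own. It is an illustration only, not Intel’s renderer; the function name `bin_triangles`, the 64-pixel tile size, and the bounding-box overlap test are all assumptions made for the example.

```python
import math
from collections import defaultdict

TILE_SIZE = 64  # tile edge in pixels; illustrative value, not Larrabee's actual tile size


def bin_triangles(triangles, width, height, tile_size=TILE_SIZE):
    """Map each tile (tx, ty) to the indices of the triangles whose
    screen-space bounding box overlaps it (conservative binning)."""
    bins = defaultdict(list)
    max_tx = (width - 1) // tile_size
    max_ty = (height - 1) // tile_size
    for index, triangle in enumerate(triangles):
        xs = [x for x, _ in triangle]
        ys = [y for _, y in triangle]
        # Skip triangles whose bounding box lies entirely off screen.
        if max(xs) < 0 or max(ys) < 0 or min(xs) >= width or min(ys) >= height:
            continue
        # Convert the bounding box to a clamped range of tile coordinates.
        tx0 = max(math.floor(min(xs)) // tile_size, 0)
        tx1 = min(math.floor(max(xs)) // tile_size, max_tx)
        ty0 = max(math.floor(min(ys)) // tile_size, 0)
        ty1 = min(math.floor(max(ys)) // tile_size, max_ty)
        for ty in range(ty0, ty1 + 1):
            for tx in range(tx0, tx1 + 1):
                bins[(tx, ty)].append(index)
    return bins


# Hypothetical input: two small triangles on a 128 x 128 target.
triangles = [
    [(10, 10), (20, 10), (10, 20)],  # fits inside tile (0, 0)
    [(60, 60), (70, 60), (60, 70)],  # straddles the corner shared by four tiles
]
bins = bin_triangles(triangles, width=128, height=128)
print(sorted(bins.items()))
```

Once the triangles are binned, a renderer can process one tile at a time and keep that tile’s color and depth data in cache, which is where the bandwidth saving comes from.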

Larrabee CPU core and associated system blocks. The CPU core was derived from the in-order Pentium processor design, with added 64-bit instructions, multithreading, and a wide vector processing unit (VPU).

Each core had fast access to its 256 kB local subset of the coherent second-level cache. The L1 cache sizes were 32 kB for I-cache and 32 kB for D-cache. Ring network accesses passed through the L2 cache for coherency. Intel manufactured the Knights Ferry chip in its 45 nm high-performance process and the Knights Corner chip in 22 nm.

Who asked for Larrabee anyway?

Troubled by the GPU’s gain in the share of the silicon budget in PCs, which came at the expense of the CPU, Intel sought to counter the GPU with its own GPU-like offering.

There had never been anything like it, and as such there were barriers to break and frontiers to cross, but it was all Intel’s thing. It was a project. Not a product. Not a commitment, not a contract; it was, and is, an in-house project. Intel didn’t owe anybody anything. Sure, they bragged about how great it was going to be, and maybe made some ill-advised claims about performance or schedules, but so what? It was for all intents and purposes an Intel project – a test bed, some might say a paper tiger, but the demonstration of silicon would make that a hard position to support.

So at that point most of the program managers had tossed their red flags on the floor and said it’s over. We can’t do what we want to do in the time frame we want to do it. And, the unspoken subtext was, we’re not going to allow another i740 to come out of Intel ever again.

Now the bean counters took over. What was/is the business opportunity for Larrabee? What is the ROI? Why are we doing this? What happens if we don’t? A note about companies: this kind of brutal, kill-your-darlings examination is the real strength of a company.

Larrabee as it had been positioned to date would die. It would not meet Intel’s criteria for price, performance, and power in the window it was projected for. It still needed work; like Edward Scissorhands, it wasn’t finished yet. However, the company stated that it still planned to launch a many-core discrete graphics product but would not say anything about that future product until sometime the following year.

And so it was stopped. Not killed – stopped.

Act II

Intel invested a lot in Larrabee: dollars, time, reputation, dreams, ambitions, and exploration. None of that was lost. It didn’t vanish. Rather, that work provided the building blocks for the next phase. Intel did not change its investment commitment to Larrabee. No one was fired, transferred, or time-shared. In fact, there were still open reqs.

Intel had built and learned a lot, maybe more than it originally anticipated. Larrabee was an interesting architecture. It had serious potential as a significant coprocessor in HPC, and we believed Intel would pursue that opportunity. Intel called it “throughput computing.” We called “throughput computing” a YAIT: yet another Intel term.

So the threat of Larrabee to the GPU suppliers shifted from the graphics arena to the HPC arena, which was more comfortable territory for Intel.

Intel used the Larrabee chip for its Knights series MIC (many integrated core) coprocessors. Former Larrabee team member Tom Forsyth said, “They were the exact same chip on very nearly the exact same board. As I recall, the only physical difference was that one of them did not have a DVI connector soldered onto it.”

Knights Ferry had a die size of 684 mm² and a transistor count of 2,300 million, which made it a large chip. It had 256 shading units, 32 texture mapping units, and 4 ROPs, and it supported DirectX 11.1. For GPU compute applications, it was compatible with OpenCL version 1.2.

Each core had a 512-bit vector processing unit, able to process 16 single-precision floating-point numbers simultaneously. Larrabee was different from the conventional GPUs of the day. It used the x86 instruction set with specific extensions. It had cache coherency across all its cores. It performed tasks like z-buffering, clipping, and blending in software using a tile-based rendering approach (refer to the simplified DirectX pipeline diagram in Book two).

Knights Ferry, aka Larrabee 1, was mainly an engineering sample, but a few went out as developer devices. Knights Ferry D-step, aka Larrabee 1.5, looked like it could be a proper development vehicle. The Intel team had lots of discussions about whether to sell it and, in the end, decided not to. Finally, Knights Corner, aka Larrabee 2, was sold as Xeon Phi.
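The 16-lane figure follows directly from the widths: 512 bits divided by 32 bits per single-precision value. The Python sketch below works that out and then emulates one 16-wide step of a software depth test of the kind described above, where a per-lane mask decides which pixels get written. It is a plain-Python illustration, not Larrabee code; the function name `depth_test_and_write` and the less-than depth comparison are assumptions chosen for the example.

```python
VECTOR_BITS = 512  # VPU width given in the article
FLOAT_BITS = 32    # size of one single-precision float
LANES = VECTOR_BITS // FLOAT_BITS  # 16 values per vector operation


def depth_test_and_write(z_buffer, color_buffer, frag_z, frag_color):
    """Emulate one vector-wide depth test over LANES pixels.

    A fragment passes when it is closer (smaller z) than the stored depth.
    Passing lanes overwrite the depth and color buffers in place; the
    per-lane pass/fail mask is returned.
    """
    if not (len(z_buffer) == len(color_buffer) == len(frag_z) == len(frag_color) == LANES):
        raise ValueError(f"all inputs must hold exactly {LANES} values")
    # Compare all lanes first, as a vector compare would, to build the mask.
    mask = [new_z < old_z for new_z, old_z in zip(frag_z, z_buffer)]
    # Masked write: only lanes that passed the test are updated.
    for lane, passed in enumerate(mask):
        if passed:
            z_buffer[lane] = frag_z[lane]
            color_buffer[lane] = frag_color[lane]
    return mask


# Hypothetical data: even lanes receive a closer fragment, odd lanes a farther one.
z_buffer = [1.0] * LANES
color_buffer = [0] * LANES
frag_z = [0.5 if lane % 2 == 0 else 2.0 for lane in range(LANES)]
frag_color = [7] * LANES
mask = depth_test_and_write(z_buffer, color_buffer, frag_z, frag_color)
print(LANES, sum(mask))
```

On real vector hardware the compare and the masked write would each be a single instruction over all 16 lanes; the loop here only stands in for that behavior.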

Next?

That was not the end of Larrabee as a graphics processor – it was a pause. If you build GPUs, enjoy your summer vacation; the lessons will begin again.

Or will they?

Maybe the question should be – why should they?

Remember how we got started: one of the issues was the GPU’s gain in the PC silicon budget at the expense of the CPU. There are multiple dimensions to that, including:

- Revenue share of the OEM’s silicon budget

- Unit share on CPU-GPU shipments

- Mind share of investors and consumers

- Subsequent share price on all of the above.

Discrete GPU unit shipments had a low growth rate. ATI and Nvidia hoped to offset that with GPU compute sales; however, those markets would be slower to grow than the gaming and mainstream graphics markets had been over the previous ten years.

Why would any company invest millions of dollars to be the third supplier in a flat-to-low-growth market? One answer is that the ASP and margins are very good. It’s better for the bottom line, and hence the P/E, to sell a few really high-value parts than a zillion low-margin parts.

Why bother with discrete?

GPU functionality was going in with the CPU. It was a natural progression of integration: putting more functionality in the same package. The following year we saw the first implementations. They were mainstream in terms of performance and were not serious competition for the discrete GPUs, but they further eroded the low end of the discrete realm. Just as IGPs stole the value segment from discrete, embedded graphics in the CPU took away the value and mainstream segments and even encroached on the lower segments of the performance range.

That meant the unit market share of discrete GPUs would decline further. That being the case, what was the argument for investing in that market?

Conclusion

Intel made a hard decision and a correct one at the time. Larrabee silicon was pretty much proven, and the demonstration at SC09 of measured performance hitting 1 TFLOPS (albeit with a little clock tweaking) on an SGEMM performance test (a 4K-by-4K matrix multiply) was impressive. Interestingly, it was a compute measurement, not a graphics measurement. Maybe the die had been cast then (no pun intended) about Larrabee’s future.
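For a sense of scale, the arithmetic behind that benchmark can be sketched in a few lines of Python. The sketch assumes that “4K” means 4,096 and uses the conventional count of 2n³ floating-point operations for an n-by-n matrix multiply; the function name `sgemm_flops` is ours, and the result is a back-of-envelope figure, not Intel’s measurement.

```python
def sgemm_flops(n):
    """Conventional operation count for multiplying two dense n x n matrices:
    n * n outputs, each needing about n multiplies and n adds."""
    return 2 * n ** 3


N = 4096  # assumption: "4K" in the benchmark description means 4,096
RATE_FLOPS = 1e12  # 1 TFLOPS, the rate quoted for the SC09 demonstration

total_flops = sgemm_flops(N)
seconds_per_multiply = total_flops / RATE_FLOPS

print(f"{total_flops:,} floating-point operations")
print(f"{seconds_per_multiply:.3f} s per multiply at 1 TFLOPS")
```

Under those assumptions, one multiply is about 137 billion operations, so a chip sustaining 1 TFLOPS would finish it in roughly 0.14 seconds.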

Intel’s next move was to make Larrabee available as an HPC SKU software development platform for both internal and external developers. Those developers could use it to create software that could run in high-performance computers.

That left the door open for Intel to take a second run at the graphics processor market. The nexus of compute and visualization, something we discussed at Siggraph, was clearly upon us, and it was too big and too important for Intel not to participate in all aspects of it.

Although Intel disparaged the GPU every chance it got, it always wanted one and tried a few times before Larrabee (e.g., 82786, i860, i740). In 2018, it kicked off another GPU project, code-named Xe.

Epilog

In early November 2022, u/Fuzzy posted at LinusTechTips that they had recently acquired an Intel Larrabee test AIB and managed to boot it on Windows 10 (“Got the Intel Larrabee Working,” LinusTechTips).

Source: LinusTechTips

And if you’re interested in the complete history and future trends of GPUs, check out Dr. Peddie’s three-book series coming out this month.
